The recent Adobe MAX conference showed that Adobe is making a serious commitment to XR creation tools. Through a series of acquisitions, the company has gradually assembled a suite of 3D tools that makes it a major player in XR. With Adobe tools, you can create 3D models and sculptures in VR using Medium, create materials and textures using the Substance suite, use 3D character models and animations from Mixamo, and render 3D environments using Dimension.

In addition, Adobe moved into the AR space with the iOS-only launch of Aero at last year’s Adobe MAX. This year, the Windows and macOS versions of Aero came out of private beta, so every Creative Cloud user can now jump into AR creation from their desktop.

Desktop Option

Why does desktop authoring for Aero make a difference? In part it’s an ergonomics issue. Authoring a complex AR scene is time consuming, and a mobile device is not necessarily the best tool to use for iterating through design options. On mobile, it can be cumbersome to move files around. If you’re using Photoshop or other local assets for your AR scene, it’s more efficient to just open them in the desktop app, rather than uploading to Creative Cloud or otherwise transferring to mobile.

File management may also get easier for mobile users now that Photoshop and Illustrator are available on mobile. On the other hand, it still makes much more sense to test an AR experience on the kind of mobile device that will ultimately host it. But for authoring, I still prefer the focused space of a desktop.

Functionally, there is no difference between the iOS and PC/Mac versions of Aero, so your preferences and workflow will dictate which works best for you.

Aero Use Case

Another strength of Aero is that it opens up AR authoring to a somewhat different audience than most other AR tools. If you want to build a fully interactive AR experience for training or a gaming application, you’ll use a full game engine such as Unity, or possibly a WebAR workflow. If you want to share quick, often funny AR ideas with friends, Spark AR Studio or Snap’s Lens Studio makes a lot of sense.

But if you’re interested in a narrative or artistic application of AR, neither of these kinds of tools is quite right. The game engine approach requires significant skill in programming or in a 3D toolset, which is outside the experience of many artists and authors, while the social-media-focused tools don’t have the flexibility to create more in-depth works. Aero strikes the right balance: it supports more complex creations while keeping the toolset intuitive and usable by a wider range of creatives.

Creating with Aero

Let’s look at Aero’s major features and how to use them, whichever platform you’re on.

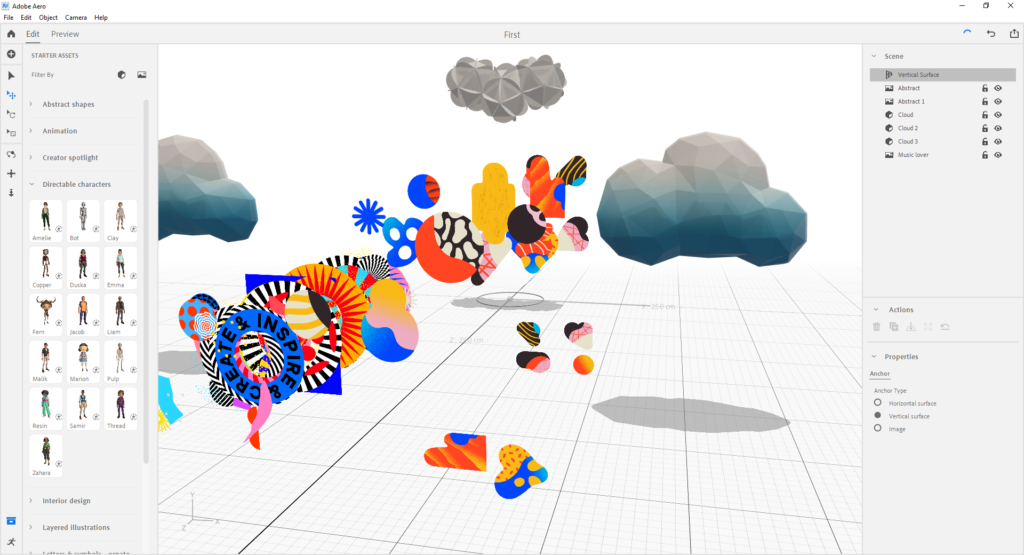

There are two major aspects to creating an AR scene with Aero. First, you add assets (2D, 3D, or audio), either by importing your own or by using the Starter Assets provided by Adobe. For your own assets, Aero supports Photoshop and Illustrator files, standard image files, and OBJ, GLB, and glTF files.

The Starter Assets provide a nice set of options to work with while you get to know the platform. They range from simple models, such as abstract shapes and low-poly nature models, to more complex options, including rigged characters with a range of Mixamo animations.

You can anchor each asset to a horizontal plane, a vertical plane, or an image target. Then you can use a simple set of tools to rotate, scale, and move your image or model relative to the anchor.

It’s straightforward to create a simple object placement experience, and some artists have built very interesting environments by arranging 2D images in creative ways, separating the layers of a Photoshop or Illustrator document to make them dimensional.

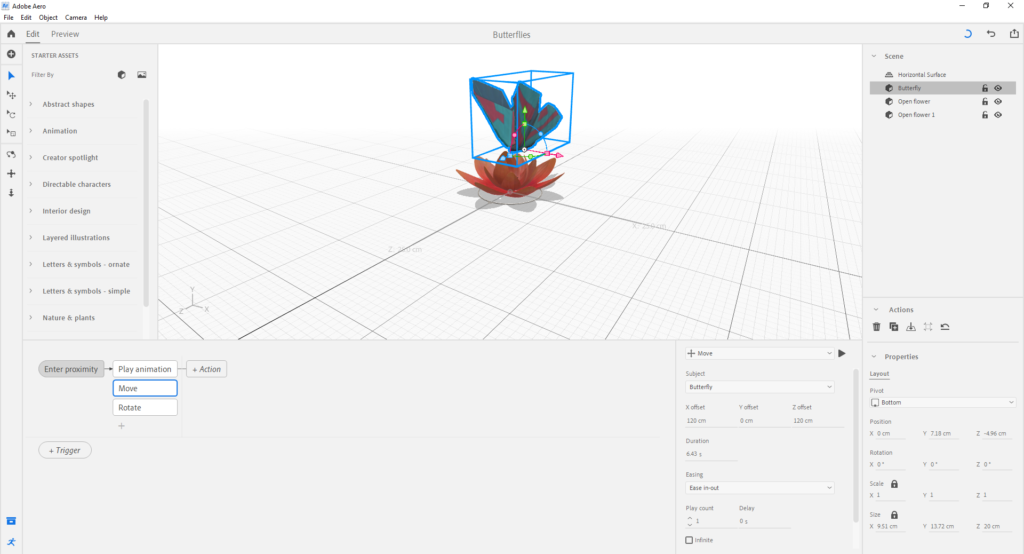

The second aspect of scene authoring is optional: creating behaviors that make the scene more interactive. There are currently only three types of behavior trigger: Start, Tap, and Enter Proximity. These are pretty minimal right now, but Adobe may well add more options as Aero moves toward release. Once the trigger condition is met (for instance, the user taps the device screen), you can set a number of actions to take, such as playing an audio clip or animation, or showing or hiding the model. You can run multiple actions at a time, and it’s also possible to set off a chain of actions, one after another, from a single trigger.

Limitations

Right now, the behavior system seems to be the weakest part of the application. As I tried combinations of triggers and actions, I quickly came across seemingly simple interactions that I wasn’t able to successfully create using the current behavior system.

In one experiment, I used the origami butterfly model included in the Starter Assets, and tried to create a simple scene where the butterfly would flutter away if the user got too close. It was easy enough to ‘hard-code’ the path of the butterfly and play a flying animation when the Enter Proximity trigger fired. But I could find no way to change the direction of the butterfly each time the trigger condition was satisfied — instead, the butterfly kept moving linearly, following the rule specified in the behavior.

If it were possible to move the butterfly relative to itself, rather than to the scene anchor, this issue would be resolved. Similarly, being able to store and modify a variable or introduce some randomness would help. I suspect these options will arrive eventually, but for the majority of Aero creators, who are working on narrative or artistic expressions, interactivity improvements may not be a priority.
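Aero’s behaviors are assembled in its UI rather than written as code, so the following is not Aero code. It is a hypothetical Python sketch, in a simplified top-down 2D view, of the difference between anchor-relative and self-relative movement in the butterfly experiment. `flee_anchor_relative` applies the same anchor-space offset on every trigger, while `flee_self_relative` turns the butterfly by a random angle and then moves it along its own heading. All names and values (the offset, the distance, the ±90° turn range) are illustrative assumptions.

```python
import math
import random
from typing import List, Tuple

# Hypothetical illustration only: Aero exposes no scripting API like this.
Point = Tuple[float, float]


def flee_anchor_relative(position: Point, offset: Point) -> Point:
    """Move by a fixed offset expressed in the anchor's coordinate frame.

    Every trigger applies the same offset, so repeated triggers push the
    butterfly along one straight line.
    """
    return (position[0] + offset[0], position[1] + offset[1])


def flee_self_relative(position: Point, heading: float, distance: float,
                       rng: random.Random) -> Tuple[Point, float]:
    """Turn by a random angle, then fly forward along the butterfly's own heading.

    The move is expressed relative to the butterfly's current heading and
    includes randomness, so each trigger sends it in a new direction.
    """
    heading += rng.uniform(-math.pi / 2, math.pi / 2)
    new_position = (position[0] + distance * math.cos(heading),
                    position[1] + distance * math.sin(heading))
    return new_position, heading


def simulate(n_triggers: int, seed: int = 0) -> Tuple[List[Point], List[Point]]:
    """Fire a hypothetical 'Enter Proximity' trigger n times under each rule."""
    rng = random.Random(seed)
    anchor_path: List[Point] = [(0.0, 0.0)]
    self_path: List[Point] = [(0.0, 0.0)]
    heading = 0.0
    for _ in range(n_triggers):
        anchor_path.append(flee_anchor_relative(anchor_path[-1], (1.0, 0.0)))
        position, heading = flee_self_relative(self_path[-1], heading, 1.0, rng)
        self_path.append(position)
    return anchor_path, self_path


anchor_path, self_path = simulate(3, seed=42)
print("anchor-relative:", anchor_path)
print("self-relative:  ", [(round(x, 2), round(y, 2)) for x, y in self_path])
```

In this sketch the anchor-relative path always lands on the same straight line, ending at (3.0, 0.0) after three triggers, while the self-relative path changes direction on each trigger, which is closer to the fluttering-away effect the experiment was after.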

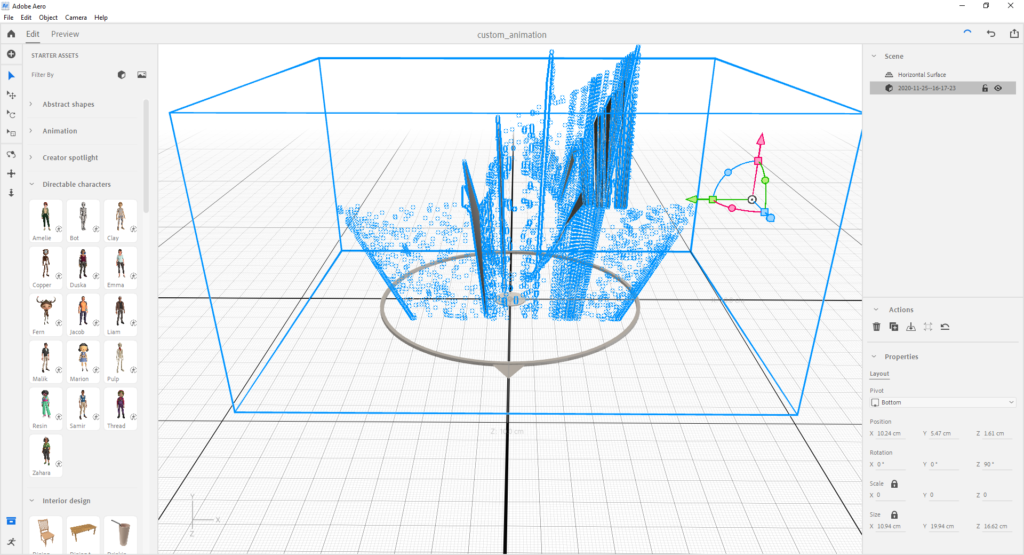

In another experiment, I recorded a 3D point cloud of my daughter playing on a playground using the iOS app Record3D. I was able to import the .gltf file and trigger playback of the 3D video’s animation in Aero, but the .gltf didn’t render correctly in the scene: some of the points appeared as polygons. This may be because the model/point cloud was too ‘heavy’ for Aero to handle, but if so, Aero gave no warning.

Though I find the resulting animation interesting, if I were an Aero user with a specific outcome in mind, I’d find it frustrating that a supposedly supported file format didn’t import correctly. Since there isn’t a lot of documentation for Aero at the moment, it would be hard to know where to start debugging an issue like this.

Publishing Directly from Aero

The most powerful feature of Aero is one-click publishing: the experience can easily be exported to a .usdz file, which opens directly on iOS devices. Android devices can’t open .usdz files natively yet, but nothing in the format itself prevents Google from adding support, since its specification is openly published as part of Pixar’s open-source Universal Scene Description (USD) project. So, hopefully, Aero will one day be able to export cross-platform experiences.

Creating Standalone Experiences

One feature of Aero that I haven’t tried yet, but I’m quite excited about, is exporting a .usdz file from Aero for use in Reality Composer, which ships with Xcode. With this workflow, it’s possible to build an ARKit-enabled iOS app or an AR Quick Look experience. So if you need a relatively simple, bespoke iOS AR app, you may be able to build it starting from Aero. If you’re interested in trying this out, you can follow along with this article: Work with Xcode and Reality Composer.

Final Thoughts

AR, more than other media, is really poised to become an artist’s playground, with the proper tools in place. Aero goes a good distance towards making these tools available, and the desktop release provides a fluid environment where creators can experiment with AR. I’d love to see some improvements to Aero’s behavior system, and also hope for a time when Google introduces .usdz support for Android, enabling publication of cross-platform experiences from Aero.

If you’d like to learn more about Aero, the sessions from the 2020 Adobe MAX conference are available on-demand, and Aero itself is free to download for iOS, as well as via Creative Cloud for PC and Mac.