If you’re the sort of person who watches makeup tutorials on YouTube or checks out beauty products on Pinterest, you may have noticed a recent uptick in AR try-on applications popping up around the web. And if you’re not that sort of person, pay attention: try-on AR is one of the most powerful and ubiquitous forms of augmented reality, and it’s only gaining traction.

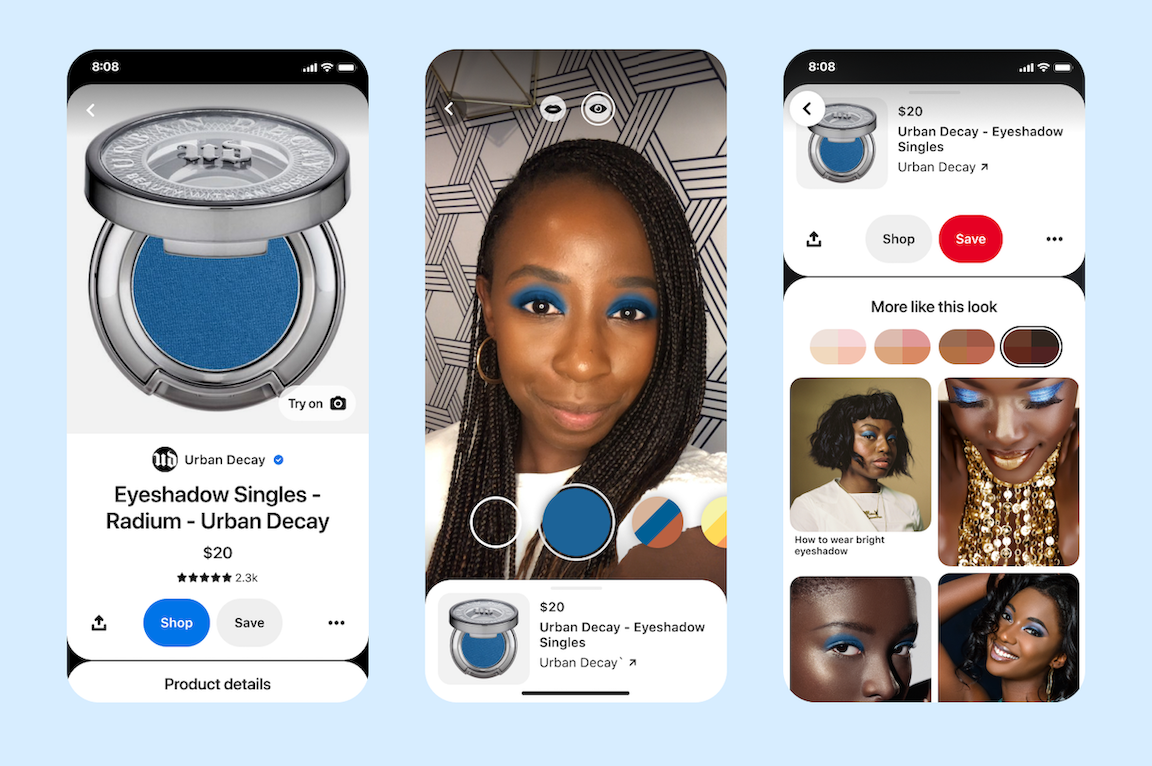

Last year, Pinterest brought lipstick swatches to its platform via AR try-on, and in January 2021, it added eyeshadow try-on to the mix. Users can browse eyeshadow styles or choose a full look that integrates complementary eye and lipstick choices.

Pinterest is offering try-ons of 4,000 shades of eyeshadow from a selection of cosmetic company partners. This adds to the 10,000 lipstick varieties previously available.

The feature extends Pinterest’s Lens camera, which integrates a camera with visual search. When using the Android or iOS Pinterest app, users can seamlessly enter AR when they browse relevant Pins or run relevant searches.

After trying cosmetics on, you can buy the lipstick or eyeshadow directly from the Pinterest app.

The feature is especially relevant to Pinterest, given its large number of female users and the fact that half of Pinterest users open the app to shop, more than twice the rate of other platforms. A study showed that 72% of customers have bought something they hadn’t planned on due to an AR experience, so combined with the existing buyer intent on Pinterest, the potential to drive sales is high.

Interestingly, Pinterest is not charging its beauty partners for access to the feature, nor does it receive a cut of sales from AR try-ons. Instead, Pinterest continues to rely on advertising revenue, and the company benefits from the addition of the try-on feature primarily through increased engagement.

Frictionless Shopping

A lot of the utility of try-on AR comes from its ubiquity. Customers who are used to playing around with the face filters or lenses on Facebook/Instagram or Snap easily adopt try-on AR. Try-on AR is marketing that feels like fun, and often leads directly to purchases. Pinterest reports that users are 5 times more likely to show purchase intent after trying on cosmetics using their AR tools than after engaging with standard pins.

Augmented reality’s role is even more essential now, as shoppers are not currently able to try beauty products like lipstick and eyeshadow in stores. Even as stores reopen and restrictions are lifted, it’s unlikely that many consumers are going to be eager to use sample products in stores any time soon (if ever).

AR try-on offers an experience that increases customers’ interest in the brand and engagement with the product, even beyond the practical use of the AR try-on.

Right now, AR features benefit from a novelty effect: they grab attention. This will fall off eventually, but for now, adding an AR tool allows a company to differentiate itself.

AR Across the Social Sphere

Pinterest is not the only platform that has recently added cosmetics try-on features. YouTube and Google Search now provide AR effects for beauty-related queries.

Google initially launched a beauty try-on via YouTube, where the AR feature was available in conjunction with relevant videos by beauty influencers. Later, in December 2020, Google expanded the feature to its native search on mobile devices. As with Pinterest, Google’s solution uses data from ModiFace to enable the AR feature, which appears when people search for relevant products.

As with Pinterest, Google does not consider the AR experience to be an ad format, and companies are not paying to participate in the feature. Instead, this is a move on Google’s part to increase the use of its shopping tab, which now provides listings for free. Listings were previously restricted to paid sources, which limited both their range and quality.

(In December, Google also piloted a car-related AR feature on its native mobile search client, so it seems like shopping-related AR is something they’re experimenting with on a number of fronts.)

Social media platforms, most notably Snapchat, have driven the growth in AR adoption. Facebook and Instagram have made a significant investment in AR, either to complement an overall strategy that embraces the power of XR, or to undermine Snap, or both.

In January, TikTok revealed an AR filter that used the iPhone’s LiDAR sensor to create a more convincing effect, extending the existing Brand Effects feature. Though I am not aware of many try-on experiences on TikTok yet, I imagine that these will appear with time.

With Pinterest and Google making these investments, it’s more unusual to see a social media platform that is not taking advantage of AR than one that has embraced the medium.

Try-On AR is Not Just for Beauty

Outside of social platforms, there are a range of try-on apps that serve the beauty industry. Sephora has launched Virtual Artist, Ulta developed GLAMLab, and Target has a virtual makeup feature in its Beauty Studio. Essie, the nail polish company, has recently launched a WebAR-based try-on project, where visitors to the company’s web page can try on 75 different colors of polish without the need to download an app or use another social media company’s platform.

But try-on AR isn’t limited to cosmetics. Warby Parker offers augmented reality try-on of their glasses via their iOS app, for instance. And, beyond face tracking, there are a growing number of brands, from Gucci to Nike, that offer AR try-on for shoes. Mirrar is a platform that facilitates jewelry try-ons, both via physical displays and via WebAR.

There are real limits to AR try-on, though. It’s possible to correctly place virtual objects on some body parts, including feet and hands, and most commonly faces and heads. This works because a large body of existing deep learning research supports these AR applications, especially in the case of face tracking: facial point detection was one of the earliest well-studied applications of computer vision.
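To make the role of facial points concrete, here is a minimal sketch of how a try-on effect might position a flat glasses overlay once a face tracker has returned the two eye centers. The function name, the `OverlayTransform` type, and the `model_eye_distance` parameter are all hypothetical and not taken from any SDK mentioned in this article; real trackers return many more points and usually work in 3D.

```python
import math
from dataclasses import dataclass


@dataclass
class OverlayTransform:
    """Where and how to draw a 2D glasses image over the camera frame."""
    center_x: float
    center_y: float
    scale: float
    angle_deg: float


def glasses_transform(left_eye, right_eye, model_eye_distance=1.0):
    """Derive a 2D placement from two eye landmarks given in pixels.

    model_eye_distance is an assumed property of the glasses asset:
    the distance between its lens centers in the asset's own units.
    """
    lx, ly = left_eye
    rx, ry = right_eye
    dx, dy = rx - lx, ry - ly
    eye_distance = math.hypot(dx, dy)
    if eye_distance == 0:
        raise ValueError("eye landmarks must not coincide")
    return OverlayTransform(
        # Center the overlay on the midpoint between the eyes.
        center_x=(lx + rx) / 2,
        center_y=(ly + ry) / 2,
        # Scale the asset so its lens spacing matches the detected eyes.
        scale=eye_distance / model_eye_distance,
        # Tilt the asset to follow the line between the eyes.
        angle_deg=math.degrees(math.atan2(dy, dx)),
    )


# Example: eyes level and 100 px apart, asset lens centers 50 units apart.
placement = glasses_transform((100, 200), (200, 200), model_eye_distance=50)
print(placement)
```

Garments are harder precisely because no small set of points like this captures how fabric fits and drapes.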

A lot of shoppers would love to be able to try on clothing using AR, but for now, this isn’t really possible. Body point tracking and pose estimation that would enable garment try-on is not as advanced as face point tracking. While it is straightforward to translate something like a pair of glasses into an accurate and convincing 3D model, many garments are more complex, and require sophisticated cloth simulations to accurately convey fit and drape.

Nonetheless, retailers like David’s Bridal have started to invest in AR technology. Instead of attempting to place a dress on the potential bride (a near-impossibility given current technology), their AR feature allows customers to visualize dresses in their homes at true-to-life scale. Though wedding dresses still need to be tried on, David’s Bridal reports a 100% increase in in-store appointment conversions as well as a 30% increase in revenue from the tool. The fashion industry will be truly transformed as AR try-on applications become a possibility for a wider range of products.

Hands-on with Try-on

So far, we’ve talked about a lot of large companies’ try-on projects, but what can independent creators do if they want to create try-on applications?

Social Options

By far, the least friction comes from designing face filters or lenses using the creator tools that social media companies provide. This allows you to create something that anyone who uses Facebook/Instagram or Snapchat can potentially engage with. Snapchat’s Lens Studio and Facebook’s Spark AR Studio are both tools that are powerful yet relatively easy to start working with, and require no coding skills.

There are always companies hoping to use these tools for their marketing efforts, so if you become proficient in creating filters or lenses, you may be able to get paid for branded creations. Snapchat has recently begun paying creators for engaging content, so you might additionally benefit by making an interesting lens and then using it to create interesting videos.

With this large reach, though, you are restricted in that you don’t ultimately own the platform, and your content can be removed by the platform provider. If you want to share your work in another format, either on the web or as part of a native app, there is no way to gracefully exit these ecosystems — you will have to re-create the effect using an entirely new set of tools.

Still, these tools are worth using, both to test users’ interest in certain types of filters and effects, and also as a marketing channel — if you’re selling glasses or headwear, for instance, you can easily use these tools to get social media users excited about seeing themselves in your product. Then, you can direct them to a more in-depth experience on your webpage, and towards a purchase.

Spark AR Studio

Facebook and Instagram filters are created using Spark AR Studio, which is available here: https://sparkar.facebook.com/ar-studio. For a comprehensive approach aimed at business users, there is a recent course on creating AR filters with Spark AR Studio: https://sparkar.facebookblueprint.com/student/catalog. For a more relaxed set of tutorials, check out the main ‘Learn’ page for Spark AR, here: https://sparkar.facebook.com/ar-studio/learn/.

Lens Studio

Lens Studio for Snapchat offers a wider range of effects, including ways to extend the tracking types available by training custom machine learning models. Better yet, they offer a number of tracking types such as foot tracking (provided by Wannaby), face mask detection, and ground/sky segmentation, all ready to go. You can also perform skeletal (pose) detection, and hand tracking and segmentation.

In fact, the just-released Lens Studio 3.4 includes full-body segmentation and 3D multi-body tracking so that you can apply costumes, as well as better hand tracking, which will allow you to apply watch or jewelry augmentations.

So if you’re interested in doing a try-on app that involves something other than face augmentation, Lens Studio is a great place to start, especially with the recent updates. You can check out Snap’s guides for Lens Studio here: https://lensstudio.snapchat.com/guides/

Native AR Try-On Options

If you’re interested in building a standalone face-tracking AR app for either Android or iOS, you are most likely going to reach for a game-engine based approach. The most popular choices of engine are Unity and Unreal, though Unity has a real edge in augmented reality.

Your options are restricted if you’re trying to build a standalone native app and you don’t want to get involved with the coding side of things. Many of the low- or no-code options for AR are usable only for placing virtual objects into the environment, and don’t yet include face-tracking or other body tracking options.

One option is the Face Tracking template in Unity MARS, which can be implemented without coding knowledge. However, MARS is a rather expensive ($600/year) subscription, so unless you are creating AR face effects as a way to make money, this is likely too much of an investment.

If you want to do something extremely simple, it’s possible to build something in Unity (or Unreal) using the base AR functionality that ships with the game engine. You can see an example of this in the following tutorial, which uses Unity’s AR Foundation, its high-level cross-platform AR API.

However, note that you won’t be able to add any features such as switching the face mask image or sharing a photo to your camera roll without at least a little bit of coding, so the kind of app you’ll be able to make this way is limited.

Unreal Engine provides a Face AR Sample template, but this is usable only on iOS via ARKit. The project is the basis for Unreal’s interesting LiveLink project, which allows you to animate characters using facial performance capture. The template is set up with Blueprints, so no coding is required.

In all of these examples, it will take a reasonable level of engine knowledge to move beyond what is offered by default in the templates.

One interesting-looking option that I haven’t yet investigated personally is DeepAR Studio. This application allows you to create four types of effects (rigid objects, deformable masks, morph masks, and post-processing effects) inside the Studio tool, and then build them for devices using the DeepAR mobile SDK. Unlike other SDK options such as ModiFace Beauty AR SDK, Banuba, and Visage, DeepAR’s pricing is both transparent and reasonable.

If you are willing to do some coding, your options quickly expand: you can access face tracking for iOS and Android in Unity using AR Foundation. AR Foundation is a useful high-level API for building for a range of devices, including mobile AR and also certain wearables like HoloLens or Magic Leap. Here’s a project to get you started building face-tracking apps with AR Foundation.

WebAR Face Augmentation

For a true cross-platform AR solution that allows you to reach iOS and Android users, as well as those using computers, WebAR is the best option. Because WebAR is accessed via a regular web browser, there is no cumbersome download of an app — your AR experience is immediately accessible.

WebAR is accessible to a much wider range of devices than native apps. Native support for AR face detection in ARCore and ARKit is restricted to a subset of higher-end phones and tablets, and is not usable on laptops or desktops with webcams.

Both the wide device compatibility and the browser-based accessibility of WebAR make it a fantastic choice for marketing generally and try-ons specifically. Use of WebAR is growing quickly, and I expect that within the next year or two, WebAR experiences will predominate in marketing applications of AR.

Though there are a number of tools to facilitate WebAR development, the choices are more limited in the face-tracking domain. They should grow over the next few years as the popularity of WebAR development increases, but for now they are split between more generalized face tracking SDKs, such as ModiFace or Banuba mentioned above, and WebAR-specific tools.

Unfortunately, many of the available tools are expensive. 8th Wall is a well-known and commonly used WebAR platform with face tracking capability, with very expensive pricing for commercially deployed applications, and a not-terribly-generous trial/small creator tier. However, 8th Wall has name recognition in the industry, and has been adopted by larger agencies and well-funded marketing operations. 8th Wall also requires a fair amount of JavaScript coding background, so it’s not easy to get started unless you’re already a web developer.

For those with little or no web development background, a better option is ZapWorks. ZapWorks offers several authoring tools, including ZapWorks Studio, which allows you to build simple face-tracking AR experiences with no code. ZapWorks has a budget-minded small-creator tier and a free hobbyist plan to get you started. ZapWorks Studio projects can be deployed simultaneously to the Zap app and to WebAR, where they are activated via QR code.

If you’re comfortable with JavaScript and interested in a free, open source solution for WebAR face tracking, consider Jeeliz. Their virtual try-on and face filters starter apps are both available on GitHub, where they can be forked and modified to your liking.

WebAR is the future of AR try-on, since your experience won’t depend on the largesse of social platforms or your users’ device choice or their willingness to download an app, so it is definitely worth getting acquainted with.

Choosing your Platform

If you’re just getting started in AR without existing coding skills and you don’t have any specific use case in mind (for example, you’re not in the business of selling sunglasses), getting started with one of the social platforms is likely going to be more satisfying and will have a gentler learning curve. You’ll be able to share your creations with a wide audience and get immediate feedback on what does and doesn’t work.

Between Lens Studio and Spark AR Studio, Lens Studio (Snapchat) is the more robust platform. So if you have friends on both networks, going the Snapchat route gives you more fun tracking types to play with.

Getting users to download and interact with a native app can be challenging, but if you’re envisioning a larger-scale AR app and think you can generate an audience for it, you may want to choose a game engine. If so, there are a larger number of third-party SDKs with Unity packages available, and Unity is generally more AR-focused, so it’s very likely the better choice unless you’re already familiar with Unreal.

Finally, WebAR is the platform that will dominate try-on applications in the next few years. Devices and tools are only improving, and the user experience improvements of WebAR over native apps, as well as the freedom from dependence on social platforms, will make this the right choice for many companies and smaller creators. If you’re not afraid of coding, using an open-source tool like Jeeliz might be a great choice, though today, many AR developers are being hired to work with expensive tools like 8th Wall. Otherwise, ZapWorks may give you a starting point, and it’s likely that there will be many more low- or no-code options to come.
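As a rough summary, the rules of thumb in this section could be encoded in a small helper like the one below. This is only a sketch of my own recommendations, not a definitive decision procedure; the function and parameter names are hypothetical, and real projects will weigh budget, audience, and existing skills in ways a few booleans can’t capture.

```python
def suggest_platform(can_code: bool, has_use_case: bool,
                     wants_native_app: bool, knows_unreal: bool = False) -> str:
    """Suggest a starting point using the rules of thumb from this section."""
    if wants_native_app:
        # Native apps usually mean a game engine; Unity is the default pick
        # unless you already know Unreal.
        return "Unreal" if knows_unreal else "Unity"
    if not can_code and not has_use_case:
        # Gentle learning curve and a built-in audience; Lens Studio is
        # the more robust of the two social tools.
        return "Lens Studio"
    # Everything else points toward WebAR: open source if you can code,
    # a low-code authoring tool if you can't.
    return "Jeeliz" if can_code else "ZapWorks"


# A sunglasses seller with no coding background who wants a web experience.
print(suggest_platform(can_code=False, has_use_case=True, wants_native_app=False))
```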

Whichever platform you choose, try-on AR is a great opportunity for fashion and beauty companies to get customers excited about their products, and a great way for creators to get involved in building useful and fun AR experiences.