For those new to the immersive technology space, a first question is often about all the abbreviations used to describe the various technologies — VR, AR, MR and XR. For aspiring developers, having a good sense of the technological landscape will give you a better idea of how to start on your creation journey.

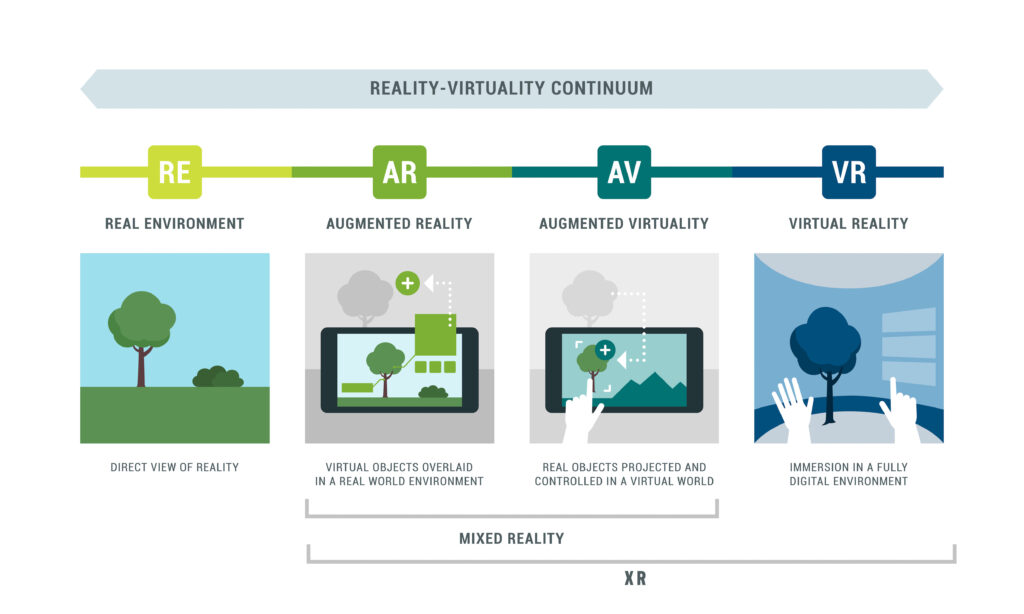

Researchers and practitioners who discuss these technologies refer to a virtuality spectrum, which provides a useful visualization of how AR, MR, and VR relate, even if the real-world use of these terms can be a bit looser. The idea of the reality-virtuality continuum was introduced by Paul Milgram and others in 1994, so while the consumer technology is new, the framework has been in use for over 25 years.

Before we begin, a caveat: what follows is the way I think about the technologies in the immersive technology space, and other creators and companies describe things a bit differently. Still, I hope this will give you a good start if you’re just getting your bearings in XR.

Since this site’s name includes ‘XR’, and because it is my preferred term for describing this space, let’s start there. So, what is XR?

XR — Crossing the Virtuality Spectrum

You might have heard that the ‘X’ in XR stands for extended, as in ‘Extended Reality.’ In fact, that’s the current Wikipedia definition of XR.

My preference is to interpret the ‘X’ as a stand-in for any of the other letters, sort of like the ‘x’ variable in algebra. In this way, XR encompasses all the other technologies: AR, MR and VR. That’s why this site’s name is CreatXR: I wanted to be able to discuss creating experiences across the full range of the virtuality spectrum. Whether you read the ‘X’ as ‘extended’ or as a placeholder, XR is the widest term, encompassing augmented, mixed and virtual realities.

Before we get into the differences among these abbreviations, you might wonder what it is about these technologies that connects them into a single topic. Across the spectrum, XR technologies have a few things in common. Instead of traditional computer inputs, XR technologies use physical movement as a primary input. With VR, the motion of your head is the primary input, as it is with headset-based AR. With mobile AR, the motion of the device in your hand is the primary input. So in addition to covering varied degrees of virtuality, the XR space introduces a new model of human-computer interaction.

Now let’s expand upon VR, AR and MR.

VR — Virtual Reality

VR stands for Virtual Reality, and describes an experience where the user’s visual and auditory senses are cut off from the real world. Virtual Reality aims to completely immerse the user in a virtual world made from volumetric 3D models, 360 video or images, or some combination of these. The user may wear headphones that largely block out sounds from the outside world. The intention is to completely replace the real world with an immersive experience that feels real, even though the stimulus is computer generated.

Although there are some non-head-mounted VR systems, such as the CAVE, most VR is experienced using an HMD (head-mounted display, also called a headset). Some examples of VR devices include the Oculus Rift and Quest, the HTC Vive, the Valve Index, PSVR, and (slightly confusingly) Windows Mixed Reality headsets. There are smaller players in this space too, such as the Pico Neo, as well as headsets that were in wide circulation but are no longer supported, such as Google Daydream, Oculus Go and GearVR.

Mobile VR, which uses a smartphone slotted into a Google Cardboard or a more sophisticated headset such as the GearVR, is still a viable medium, though it has largely fallen out of the public eye. Cardboard and other mobile VR headsets remain in use in certain spheres, such as educational applications.

Virtual Reality experiences include a wide selection of indie and AAA games, but also include experiences that serve a range of purposes in travel, education, data visualization, engineering, design, training simulation, and more.

AR — Augmented Reality

Augmented Reality seeks to place computer-generated imagery and/or audio into the user’s real-world environment. AR experiences are designed for either mobile devices or headsets. Unlike VR, mainstream AR is still focused on the mobile space, because the high cost of AR HMDs places them out of the reach of most consumers.

Mobile AR can be experienced on high-end mobile devices, both iOS and Android, using either Apple’s ARKit SDK (software development kit) or Google’s ARCore SDK for Android. The best-known AR app on the market is Pokémon Go, which is still probably most people’s reference point for AR. Yet mobile AR also boasts a range of other uses, including simple AR measurement tools, stargazing assistants, art creation programs, and a large number of shopping and marketing apps.

On the HMD side of things, the leaders in the AR space are Microsoft’s HoloLens and Magic Leap’s Magic Leap 1. Though both were initially positioned as general-purpose AR devices, they failed to find adoption in the consumer market, both for lack of compelling content and because of pricing: the HoloLens 2 costs $3,500 for just the device, and the Magic Leap 1 starts at $2,295. It’s possible that with some lower-cost entries in the AR HMD space, such as the Nreal Light and Apple’s long-rumored AR glasses, we’ll see more widespread AR HMD use in the consumer space.

Another possibility for the future of headset-based AR is that we will see greater adoption through the use of pass-through video AR. Pass-through AR uses an opaque headset that displays camera video of the real world with virtual content layered on top, unlike optical see-through headsets such as the Magic Leap 1 or the HoloLens. Pass-through video sidesteps many of the technical challenges that have held AR HMDs back thus far.

Headset-based AR is well suited to training purposes, engineering applications, and augmenting challenging work environments with contextual information, such as seamlessly providing surgeons with patient information.

Augmented Virtuality?

You may notice that of the four major points on the virtuality spectrum, one (Augmented Virtuality) is rarely discussed. Augmented Virtuality is when you add elements of the real world back into a mostly virtual scene. Currently, the most common examples of this occur in productivity apps. When you use a workspace in Virtual Reality, the contents of your computer monitor, and possibly the location of your keyboard, are reflected in the virtual space, allowing you to work more seamlessly in a virtual environment.

A number of VR headsets now have front-facing cameras that could be used to add back real-world information. The Oculus Quest’s cameras are used mainly to help map your play space and for hand tracking, yet it’s possible to imagine something more advanced in the future. Using computer vision, certain types of features might be selected from the real-world environment and added to the virtual scene.

Pass-through video AR headsets might lead to more experimentation in this realm. For now, developers aren’t describing a lot of compelling use cases for Augmented Virtuality — perhaps if hardware improvements open up new possibilities in this domain, some more thought will be put into Augmented Virtuality content.

MR — Mixed Reality

Mixed Reality describes anything that is neither purely virtual nor purely the real world. By this definition, Mixed Reality encompasses both Augmented Reality and Augmented Virtuality, but doesn’t span the full virtuality continuum.

Yet companies like Microsoft have used the term much more liberally. Microsoft markets Windows Mixed Reality, which it uses to describe both its HoloLens devices and a range of licensed third-party VR headsets made by companies such as Dell, Samsung and Acer. Although the term ‘Mixed Reality’ might describe Microsoft’s ambitions, the devices remain firmly on the AR side of the spectrum with the HoloLens and on the VR side for the other headsets. The terminology is more marketing buzz than anything.

In a strict sense, there is no ‘pure’ VR. Even pushing our current capabilities to the limits with VR treadmills and wind machines, there are still sources of real-world input that invade the mostly virtual experience: room temperature, perhaps, or odors. On the other hand, without thinking about it, we augment our real world constantly with our mobile devices, getting on-the-fly contextual navigation from mapping apps, for instance.

For this reason, it’s plausible to say that Mixed Reality (and XR, for that matter) runs the whole length of the reality-virtuality continuum. But I don’t find this particularly helpful, so I prefer to stay with the more constrained definition of each acronym. So, for my purposes, MR doesn’t include VR, no matter what Microsoft may say.
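To make the constrained definitions concrete, here is a minimal TypeScript sketch of the taxonomy as I use it in this article. The names (`continuum`, `covers`, `termsFor`) are made up for illustration and don't come from any library, and the mapping reflects my preferred definitions rather than any vendor's marketing usage.

```typescript
// The four major points on the reality-virtuality continuum,
// ordered from fully real to fully virtual (illustrative labels).
const continuum = [
  "real",
  "augmented-reality",
  "augmented-virtuality",
  "virtual",
] as const;
type Point = (typeof continuum)[number];

type Term = "AR" | "AV" | "MR" | "VR" | "XR";

// Which points each term covers under the constrained definitions
// used in this article (an assumption, not an industry standard).
const covers: Record<Term, readonly Point[]> = {
  AR: ["augmented-reality"],
  AV: ["augmented-virtuality"],
  MR: ["augmented-reality", "augmented-virtuality"], // between the endpoints
  VR: ["virtual"],
  XR: ["augmented-reality", "augmented-virtuality", "virtual"], // umbrella term
};

// Returns every term that applies to a given point on the continuum.
function termsFor(point: Point): Term[] {
  return (Object.keys(covers) as Term[]).filter(
    (term) => covers[term].indexOf(point) !== -1
  );
}
```

Under this mapping, `termsFor("virtual")` includes VR and XR but not MR, which is exactly the distinction drawn above.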

Spatial Computing

Just to confuse things further, some companies, such as Magic Leap, insist upon differentiating what they are doing from the capabilities of other AR devices by calling it ‘Spatial Computing’. Arguably, anything along the virtuality spectrum, and even things that don’t fall within it, could be called ‘spatial,’ so I don’t think the term is particularly useful. Magic Leap is going through an identity crisis, so the vocabulary is probably intended to set the company apart from the competition and help justify the device’s price point.

Creating across the Virtuality Spectrum

You might wonder if the skills required for building experiences across the virtuality spectrum are the same. While some skills translate, there are a number of differences too.

Generally, the technological tools you’ll use to build in VR vs AR may be the same — for instance, you can use a game engine such as Unity3D or Unreal to build either AR or VR apps. You can also use a range of Web APIs which fall under the blanket term WebXR. But the precise skills you’ll need most are a bit different.
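As a small taste of the WebXR side, here is a hedged TypeScript sketch that asks which session modes a device supports. It relies on the `isSessionSupported` method that the WebXR Device API exposes on `navigator.xr`. The `XRSystemLike` interface and the `supportedModes` helper are my own illustrative names, declared here so the snippet compiles without browser type definitions.

```typescript
// Session modes defined by WebXR ("immersive-ar" comes from the AR module).
type SessionMode = "immersive-vr" | "immersive-ar" | "inline";

// Minimal structural type for the part of navigator.xr used below
// (a hypothetical stand-in for the browser's own XRSystem type).
interface XRSystemLike {
  isSessionSupported(mode: SessionMode): Promise<boolean>;
}

// Returns the session modes the given XR system reports as supported.
async function supportedModes(
  xr: XRSystemLike | undefined
): Promise<SessionMode[]> {
  if (!xr) return []; // WebXR is not available in this browser

  const modes: SessionMode[] = ["immersive-vr", "immersive-ar", "inline"];
  const supported: SessionMode[] = [];
  for (const mode of modes) {
    try {
      if (await xr.isSessionSupported(mode)) supported.push(mode);
    } catch {
      // A rejected promise is treated as "not supported".
    }
  }
  return supported;
}
```

In a browser you might call it as `supportedModes((navigator as any).xr)` and use the result to decide whether to show an "Enter VR" or "Enter AR" button.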

Since most AR experiences are still built for mobile devices, you’ll need to have more of a sense of what it is like to develop a more traditional native app, especially UI-wise. On the other hand, VR experiences have a whole range of UX challenges to confront that don’t pose a problem in AR, such as how to accommodate user locomotion and avoid simulator sickness.

If you’re sure which technology most interests you, you can get started learning those skills using the game engine or web framework of your choice. But if you’re not yet sure whether to focus on AR or VR, work towards a broad understanding of your development environment of choice (Unity, for instance), and you will be able to move between platforms more easily once you are surer of your path.