For many forms of virtual and augmented reality content, a game engine is a key tool for app creation. Game engines are software that provides a pre-made solution to many interactive 3D tasks — rendering graphics to the hardware, handling audio, physics and input, for example. The game engine also provides an Editor environment that allows you to design your XR experience and implement custom behaviors.

There are forms of VR content that don’t rely too heavily on 3D engines — linear 360 videos fall into this category — but in most cases, XR creators will eventually need to gain at least some experience with a game engine.

So which engine is best for XR creators? There are many options, but two really rise to the top: Unity and Unreal. Both provide tools for creating AR and VR experiences, and both have a large community of creators and plenty of learning resources. So, which should you choose?

If you’re not planning on making an XR game, don’t let the title ‘game engine’ deter you: game engines are widely used for many purposes beyond gaming. Film productions, architectural renderings and machine learning projects all routinely rely on game engines.

Because the game engine tool is so central to most XR development, one of the first decisions a new XR developer needs to make is where to start, engine-wise. For most new developers, the decision comes down to Unity or Unreal, though there are a few other options which I’ll mention at the end of this post. But since most XR development is done using one of these two engines, we’ll focus on the differences between the two.

Still, you may not need an engine

Engines are most useful for building native applications: XR experiences that either run on a mobile device or are installed on a computer (via Steam, for example). WebXR enables XR for the web, and makes use of a very different set of tools and development ecosystem. You’ll typically use JavaScript to build XR for the web rather than a game engine like Unity or Unreal. I hope to cover options for getting started with WebXR in an upcoming set of posts, so stay tuned if that’s what you’re interested in.

If you’re just beginning your XR journey, it’s easy to get paralysed by the engine decision. You really can’t go wrong with either of the two main contenders, Unity or Unreal. Though there are some differences that might sway you in one direction or the other, either will set you up for success in XR. It’s more important to choose and stick with the engine long enough to become comfortable and begin digging into XR design and development than it is to make the ‘right’ choice.

On the other hand, before embarking on a bigger project, it helps to know where your engine choice may affect development, and it is worth thinking more closely about which engine to use. Take the differences described here into consideration, and experiment with any contenders before committing to one.

Unity vs. Unreal, general differences

Let’s talk about the more obvious, as well as some more nuanced differences between the two engines. Unreal is made by Epic, and is the engine that powers Fortnite as well as a number of other FPS type games made by the company. The engine is generally associated with AAA console and PC titles. Unity is owned by Unity Technologies, and the company is focused solely on engine development. Unity was one of the first engines to support iPhone development, and for this reason, is often considered the go-to engine for mobile development. Unity is also very strong in the indie development sphere, with many notable indie titles having been created using Unity.

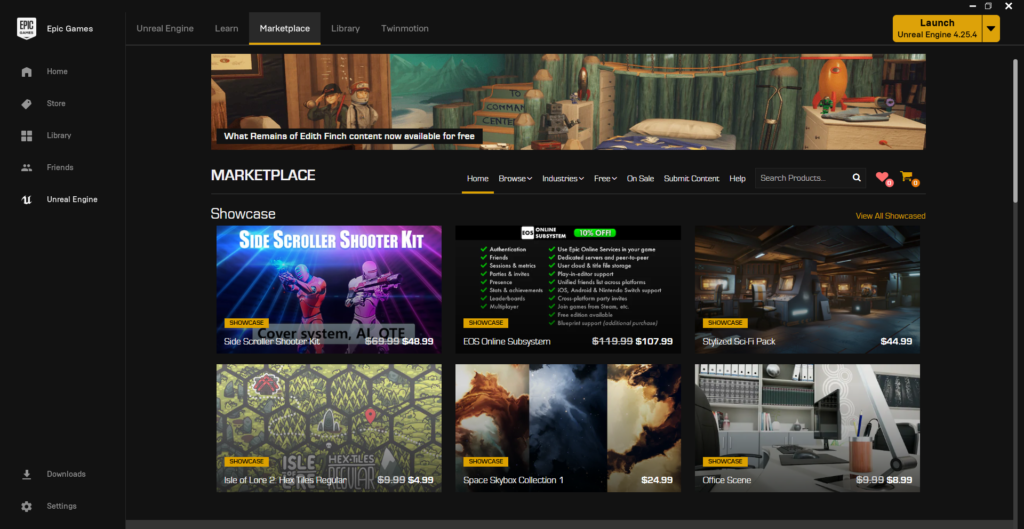

Epic has been able to build off of the success of Fortnite to improve the position of the Unreal Engine over the last couple of years. Unreal now waives all licensing fees on the first $1 million in revenue a title makes, and provides generous grants to select developers. All Unreal Engine users can also benefit from free Marketplace assets each month, many of which can be very useful in prototyping and beyond.

Unity is in a less enviable financial position, and has moved to shore up its situation recently with an IPO. Though the company continues to bring in substantial revenue, it is not profitable, and thus there are fewer freebies. Yet, Unity’s pricing model is generally quite fair, especially for the smaller development teams that favor the engine. You can use a free Personal license until making $100,000 per year, and then you’ll need to pay $400/yr per seat — or more, once your revenue exceeds $200,000 per year. On the other hand, Unity takes no royalties, so the per-seat cost can be comparatively inexpensive, depending on the amount of revenue a title brings in. With Pokemon Go — developed in Unity — Niantic saved quite a bit by only paying a seat cost rather than a royalty on their billions.
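To make the seat-versus-royalty trade-off concrete, here is a rough sketch of the arithmetic in Python. The Unity tiers and the Unreal $1 million waiver are the figures quoted in this post; the 5% Unreal royalty rate is an assumption that does not appear above, and the function names are made up for illustration. It also simplifies by treating one year of revenue from a single title, so treat it as a back-of-the-envelope comparison rather than a reading of either license.

```python
# Rough licensing arithmetic using the figures quoted in this post.
# Assumption: Unreal's royalty is 5% of a title's revenue above the waived
# first $1 million. All figures may be out of date; check the current terms.

UNREAL_ROYALTY_PERCENT = 5           # assumed rate, not stated in this post
UNREAL_WAIVED_REVENUE = 1_000_000    # royalty-free revenue per title

UNITY_FREE_LIMIT = 100_000           # Personal license revenue ceiling
UNITY_PRO_THRESHOLD = 200_000        # above this, the Pro seat price applies
UNITY_SEAT_PER_YEAR = 400            # $400/yr per seat
UNITY_PRO_SEAT_PER_YEAR = 150 * 12   # Pro at $150/month per seat


def unity_annual_cost(annual_revenue, seats):
    """Seat fees for one year; Unity takes no royalty."""
    if annual_revenue < UNITY_FREE_LIMIT:
        return 0
    if annual_revenue <= UNITY_PRO_THRESHOLD:
        return seats * UNITY_SEAT_PER_YEAR
    return seats * UNITY_PRO_SEAT_PER_YEAR


def unreal_royalty(title_revenue):
    """Royalty owed on one title's revenue; seats themselves are free."""
    royalty_base = max(0, title_revenue - UNREAL_WAIVED_REVENUE)
    # Integer arithmetic avoids floating-point rounding on dollar amounts.
    return royalty_base * UNREAL_ROYALTY_PERCENT // 100


if __name__ == "__main__":
    for revenue, seats in [(150_000, 3), (5_000_000, 10)]:
        print(revenue, seats,
              unity_annual_cost(revenue, seats),
              unreal_royalty(revenue))
```

Under these assumptions, a three-person team earning $150,000 pays $1,200 in Unity seats and nothing to Epic, while a ten-person team earning $5 million pays $18,000 in Unity seats against a $200,000 Unreal royalty. The crossover depends heavily on team size and revenue, which is the point of the Pokemon Go example.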

Despite Unity’s less enviable financial position, both companies are stable, and developers can rely on them remaining viable throughout the long lifespan of a healthy project.

Rendering

One of the most common criticisms of Unity is that it is not very ‘pretty’ out of the box. Even the Editor interface is retro, and definitely less slick than Unreal. And it’s true that while achieving AAA-level graphics is possible in Unity, it takes more work and a higher level of skill than it does in Unreal. The new Universal Render Pipeline and High Definition Render Pipeline go some of the distance towards improving the situation, but in general, you will have to put more effort into graphics quality in Unity.

Watching some videos comparing the two engines will soon show you that when scenes are created properly, both Unity and Unreal are quite capable of rendering beautiful visual experiences.

Project Scope

Due to its history as a solution for mobile titles, Unity is best suited to smaller projects rather than AAA blockbuster RPGs with sprawling scope. Hopefully you won’t make the mistake of taking on a massive-scale project without the experience and team to back this up, but when and if you do, Unreal might be the better of the two choices. On the other hand, Unity is right-sized for most XR projects, so unless you think you’re building Half-Life: Alyx (which was actually built with Valve’s own Source Engine), this is just fine.

Because AR projects tend to be smaller in scope than VR experiences, Unreal is probably overkill, even if it is perfectly serviceable in creating AR. This applies to 360 video projects and other light-weight tours or story-based VR as well, where Unity is the more appropriately scaled tool for the job.

Engine ‘Feel’ and Features

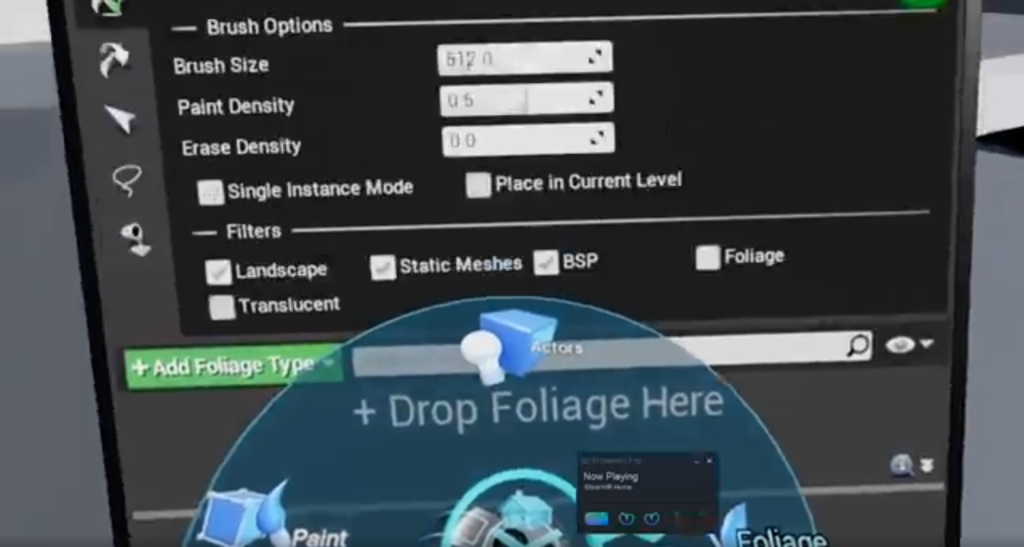

Unreal generally has a more coherent feel than Unity does, while Unity relies heavily on a plugin-based architecture, even for features that may seem like core functionality. For a long time, Unity did not provide developers any way to quickly prototype by creating simple geometry beyond the use of a few geometrical primitives (cube, sphere, etc.), while in Unreal it was possible to create custom geometry and apply some simple visual effects with ‘Paint’.

Unity now does provide this functionality for free, but it is still external to the core Engine — Unity acquired a set of plugins that were once sold on the Asset Store and has made them freely available, but they still must be installed separately through the Package Manager.

Of the features Unity is missing, the most glaring omission is the lack of a coherent networking solution. UNet, the existing set of networking APIs and services, is deprecated, and the newer ‘Connected Games’ solution is yet to launch, leaving anyone hoping to develop multiplayer to turn to external services. Though there are several popular networking solutions for Unity, such as Photon, it is frustrating that this functionality is not part of the engine to start with.

In the case of networking, the old UNet may be officially deprecated so that upcoming projects will all use the updated system (whenever it comes out, that is), but in most cases the strategy Unity has used as it adds systems is to keep multiple overlapping systems around and supported simultaneously.

In my experience, this is one of the most confusing aspects of using Unity: the overlapping and incompatible systems can give the engine a bit of a Frankensteined-together feel. Some major systems that have recently been introduced to improve key portions of the engine include an updated input system and new rendering pipelines. Each of these additions is an improvement on its own, but for someone who is used to the existing systems, the costs of switching can be quite high. Many existing plugins may only be configured to use the existing rendering pipelines, for instance, so that using some assets in your project will require reconstructing shaders in the new Shader Graph.

Newer systems naturally tend to have fewer tutorials or learning resources, so finding the support to complete a shader conversion task or to implement the new input system may be more difficult. Dealing with these sorts of mismatches can be frustrating, time-consuming, and if you’re not carefully making backups, can lead to lost work.

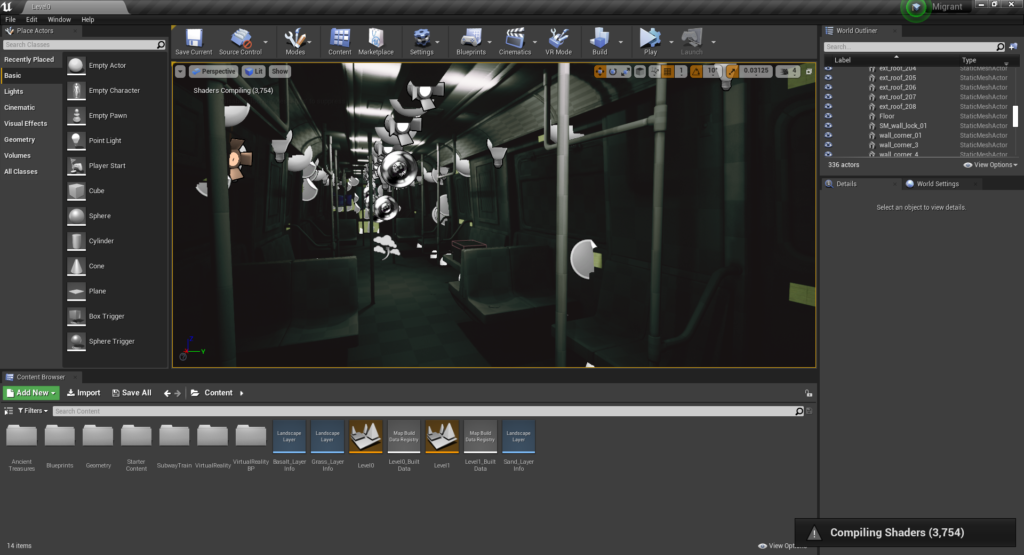

Unreal has a more cohesive feel in-Editor, but is a very ‘big’ engine, and can be very heavy on your development rig. Forget about installing Unreal on a laptop, whereas Unity will perform reasonably even on a 3 or 4 year old laptop of decent quality. If you’re developing VR, you may have a more powerful machine, so this may be more relevant to AR developers. Yet even on my development machine with a VR-ready GPU, I find that Unreal is endlessly compiling shaders, to an extent that my workflow gets interrupted. To be fair, Unity’s light baking can be similarly frustrating, but at least that can be set to bake only when desired. Unreal’s shaders render as gray until they have been fully calculated.

Scripting

In Unity, you’ll use C# to write code, while Unreal uses C++. People make more out of the difference between C# and C++ than is reasonable. Of the two, C# is easier to learn, and more concise, but I don’t think the difference in complexity should stop you if you’re otherwise more partial to Unreal — if you’re starting from scratch, either language will present challenges.

Both languages are serviceable for game development scripting, and also have broader applications which can make them worthwhile to learn. C# is used for web development (usually with the .NET framework) while C++ is used for a huge range of purposes, including operating systems, desktop applications, and embedded systems.

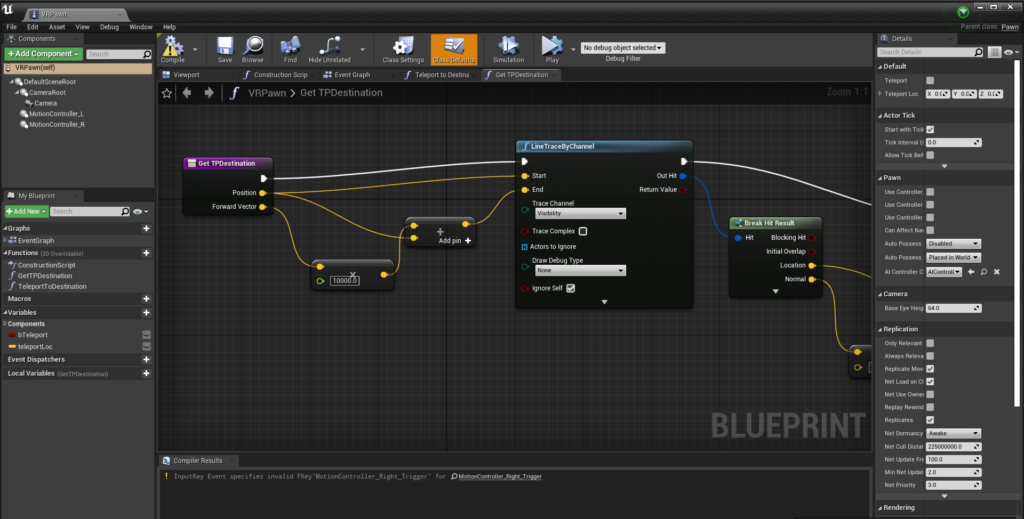

Beyond the scripting languages, Unreal has a robust ‘Blueprints’ visual scripting system, which can be used for almost all purposes if you are really resistant to code. Blueprints can be less efficient, and can cause optimization problems if not used correctly, but you should be able to build a performant XR application exclusively with Blueprints, especially if optimization is taken seriously.

Unity recently added the Bolt visual scripting system, which is freely available as a plugin. There are fewer existing learning resources for developing Unity games and applications using Bolt, so at this point, if you’re interested in using a visual scripting system rather than coding, Unreal offers a more robust option for you.

Learning Resources

One area where Unity really shines is in the wide availability of tutorials and other learning resources to help you get started and progress in development. This may be due to Unity’s history as an engine that embraced the indie community early on, while Unreal was really focused on AAA console titles (and thus, professional developers). More recently, the number of Unreal learning resources has expanded, but there are still many more options for learning Unity, and especially for tutorials and courses in the XR space. Unity is also used widely in university courses, and you’ll find that offerings on Coursera and other MOOC platforms heavily favor Unity. These types of courses can be a good way to get started in XR, especially if you find the format of a more formal course to be useful.

Both Unreal and Unity offer a number of educational resources to get you started, too. Unreal’s Online Learning Content Library is a free hub containing a number of shorter video tutorials on a wide range of topics, with many added each month. However, the offerings tend to be a bit more self-contained than long-form courses, and few are specific to XR. One notable exception that has boosted Unreal’s position is the Oculus VR Production for Unreal Learning Path that launched earlier this fall.

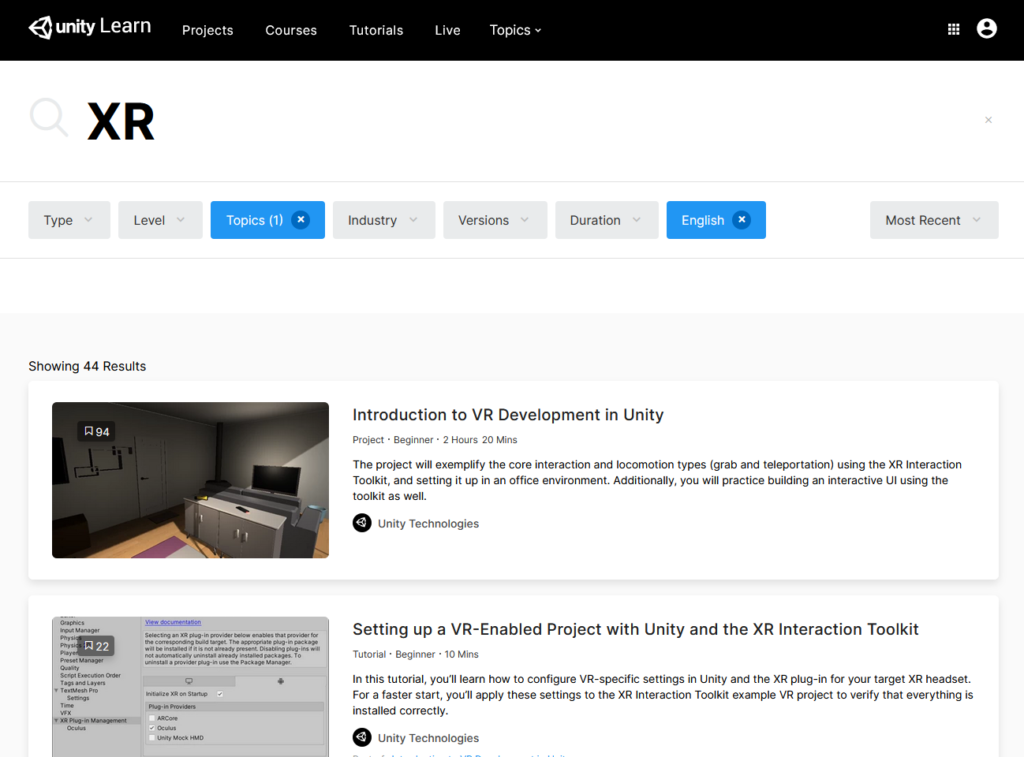

Unity Learn, on the other hand, offers an extremely wide range of learning choices, from quick tutorials to the epic 23-hour Design, Develop and Deploy for VR course, co-created with Oculus and comparable to the aforementioned Unreal Learning Path. While these resources were once paywalled, Unity made them all free starting in the Spring of 2020. In addition to on-demand courses and tutorials, many of which are relevant to XR, Unity also offers free live ‘Create with Code’ sessions, led by Unity instructors, which are a good way to get started with Unity, guided by a community and live video instruction.

Asset Store

Also stemming from the professional vs. indie focuses of the two engines, the Unreal Marketplace and Unity Asset Store have some differences in the types of content available. Generally speaking, the Asset Store has many more options, though the quality levels are also more varied. Asset Store content is generally priced more attractively, and there is a greater selection of nice time-saving plugins for XR developers, such as VR Interaction Framework.

The Unreal Marketplace excels in providing photorealistic assets of high quality, and the very generous Free-for-the-Month Assets allow developers to sample some otherwise expensive models. On the other hand, if you are looking for low-poly models for a mobile VR or AR project, or XR specific tools, there are fewer options available.

Source Code

Unity source code access is unavailable to anyone not on a Pro plan, which runs at $150/month/seat, while Unreal’s source code is available on GitHub. This might not make much difference for most projects, but source code access can be useful at the end of a project, during optimization work. Still, this is probably not a critical point of difference for smaller development teams, and certainly not for most hobbyist developers.

That’s it for the general differences between Unity and Unreal. Now let’s talk about their specific advantages and disadvantages in the XR space. I’ll start with Unity, and then head over to Unreal below.

Using Unity for XR

Upsides

Unity is the first place most new developers look when they are thinking about creating XR content. There are a few good reasons for this. First, Unity was a mobile-first engine, and most early XR was mobile-based. As the first set of PC-based VR headsets and first generation AR headsets launched, Unity made very early commitments to XR.

Another major reason that Unity is so popular for XR developers is that it is an accessible engine which is often used as a teaching engine in universities. There are tons of Unity tutorials out there — far more than for any other engine. Most questions you might have — about the Editor and its features, about coding, or about console errors — will turn up a number of resources on the Unity forums or Stack Overflow. Many of these tutorials and resources are dedicated to XR topics. Finally, Unity Learn has a large number of learning resources, many XR-specific, which are now all free. This wealth of information means that Unity’s learning curve is much more gentle than it would be otherwise.

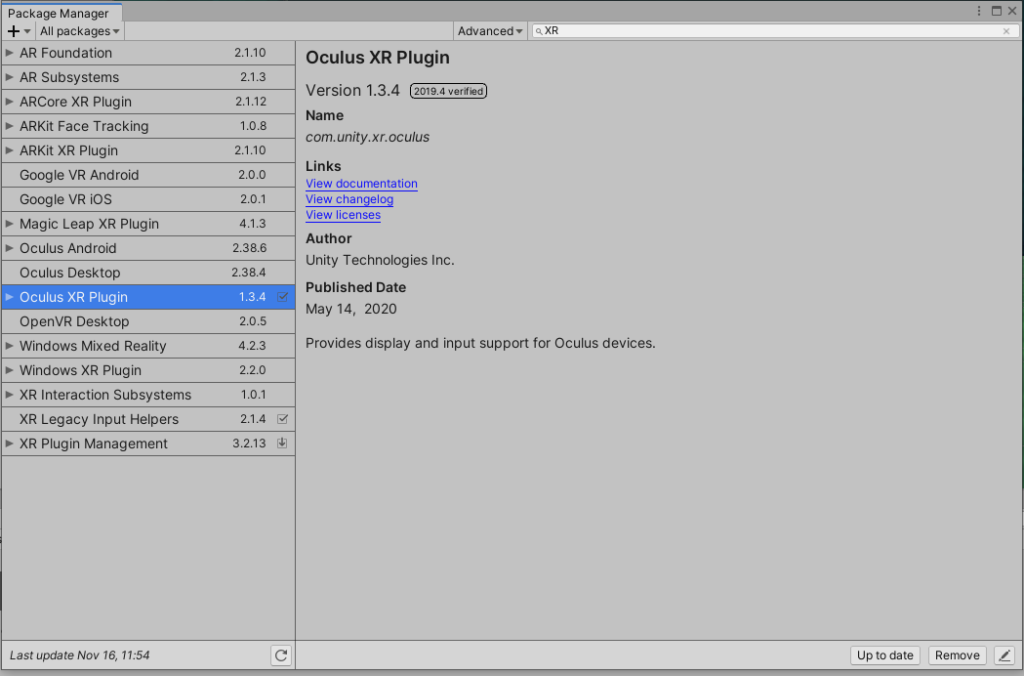

With 2019.3, Unity introduced the XR Interaction Toolkit, which abstracts some of the details of implementing UI and object interactions across multiple hardware platforms. The XR Interaction Toolkit allows developers to code a single set of application features which will work on many headsets, rather than having to repeatedly implement the same features for each platform.

On the AR side, Unity is an especially good choice due to the engine’s tight integration with ARKit functionality and the useful cross-platform tools in AR Foundation, a Unity package that allows you to develop for Android and iOS based mobile AR as well as HoloLens and Magic Leap, all using a single codebase. If you expect to develop for multiple platforms, this can significantly speed your development.

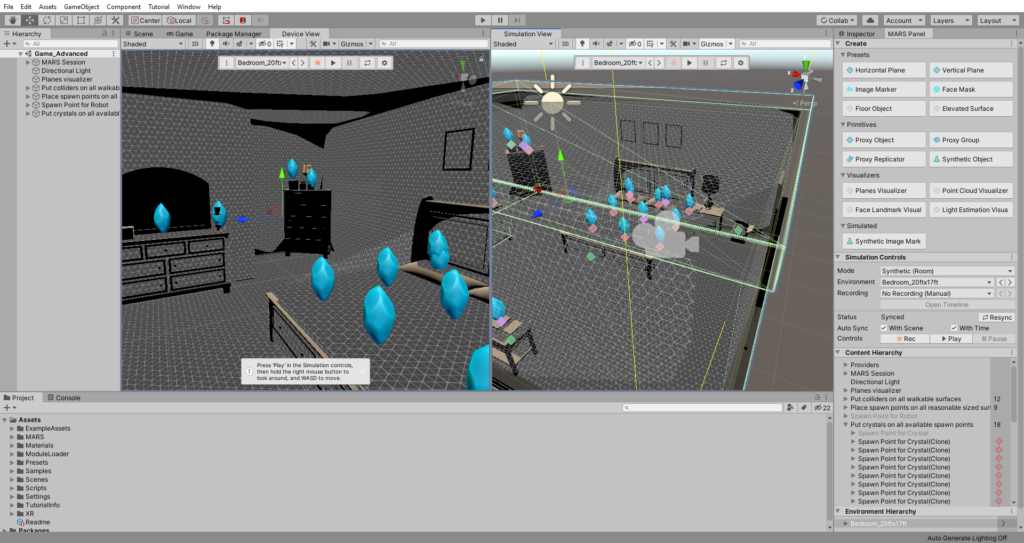

Unity has also made some significant commitments to developing tools to facilitate AR development, such as the recently released Unity MARS Editor extension, which presents a set of AR simulation environments and templates that may be useful for certain AR developers. Unity MARS is available at an additional cost of $50/month/seat, so it is probably not immediately relevant for many newer AR developers. However, the project indicates that Unity takes the AR space seriously and is making continued commitments to the medium.

Finally, if you have any inclination to experiment with WebXR, Mozilla has developed the WebXR plugin to allow you to build VR (though not AR) experiences for the web using Unity.

If you intend to freelance or seek employment in XR, rather than just work on personal projects, Unity is a strong choice. It is the most popular engine in XR, so the majority of freelance and professional opportunities use Unity.

Some examples of popular XR titles made in Unity include Beat Saber and Pokemon Go.

Unity Drawbacks for XR

In-Editor VR

One XR-specific frustration with Unity is that their once-hyped EditorXR feature, which allows you to develop VR scenes in VR, seems to have been nearly abandoned. In-Editor VR is a helpful feature for VR developers, since it allows you to experience and modify scale and world-feel as your users will experience it. Otherwise, your workflow will consist of repeatedly putting on your headset and launching VR, making modifications, and then repeating. After a while, this can feel tedious.

Unfortunately, the present EditorXR is clunky and is quite difficult to install. Despite being released over 3 years ago, it remains an experimental feature and is only available on GitHub. More worrisome, there were no updates for EditorXR for nearly a year before the most recent bump to version 0.4.10 in September, so it appears that the team is focused elsewhere.

MARS Cost

One of the most exciting XR features that Unity released within the past year is Unity MARS, an Editor extension that facilitates cross-platform AR development with a number of project templates and an in-Editor simulator which allows you to test your application’s functionality in a simulated environment without having to build to the device repeatedly. While the toolset is very promising and could significantly speed up AR development (especially if you find yourself building to device multiple times an hour), the cost of MARS puts it out of the reach of many smaller developers. For many users, it was not clear that MARS was going to be a paid tool, so it was frustrating to discover that it came with a $600/year/seat price tag.

Using Unreal for XR

Benefits

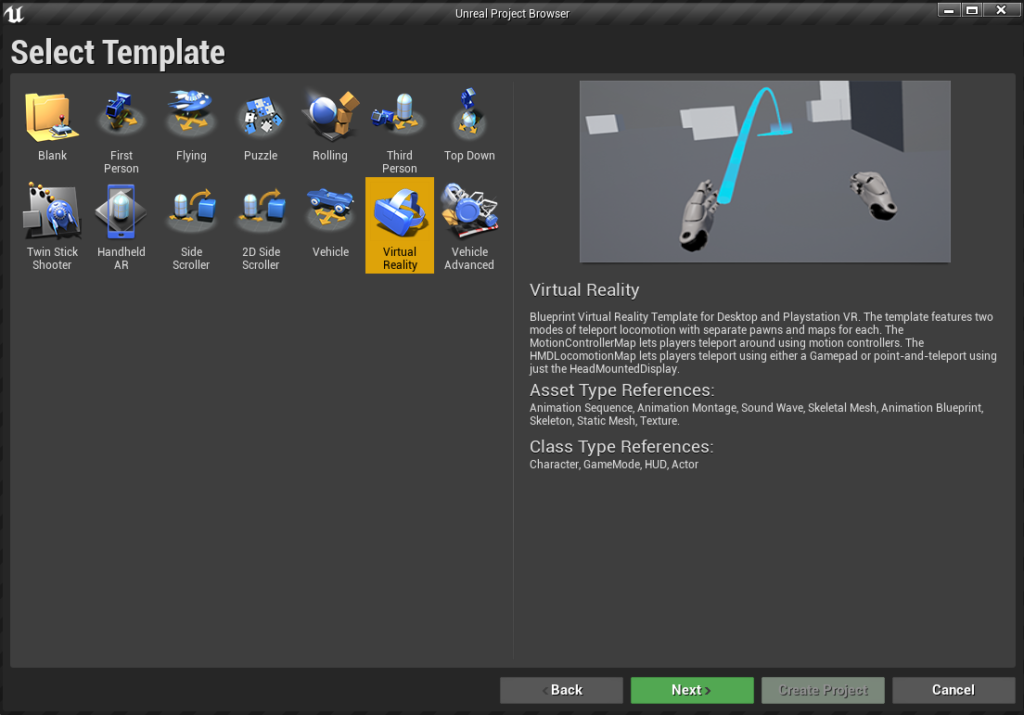

Unreal helps ease users into XR development via a set of project templates that allow you to get off the ground quickly when you create a new project. The VR template provides samples of both motion controller and HMD-only Maps (scenes), with appropriate Blueprints provided for each type of application, such as teleportation functions for each case. A number of simple VR interactions are also demonstrated, such as grabbing and throwing objects, and using a bat to hit objects. The included interaction Blueprints aren’t numerous, but they do include some of the most basic object interactions you’re likely to need.

On the AR side of things, there is a Handheld AR project template, which allows you to get started developing cross-platform applications for iOS and Android with plane detection, hit detection, and light estimation — all critical features of an AR application. There is also a simple UI to help manage the AR session.

Both the AR and VR project templates are Blueprint projects, but you are free to add C++ scripts to any existing Blueprint project. You’ll need to have at least a basic understanding of Blueprints to get a good idea about what is happening with the existing code, however. The templates don’t do much beyond handling the basic setup of your project, but in comparison with managing packages and setting up the basics in Unity, this feels quite straightforward and friendly.

Another advantage to Unreal in the XR space is the way that these default templates handle common physics interactions. It may be surprising, considering how frequently these mechanics are used in VR games, but creating convincing physics interactions in Unity is more challenging than you’d expect. Consider a tennis-like game, where the user holds a racquet that transmits the force of the user’s swing to a ball. Without some clever use of joints or workaround code, this interaction falls flat in Unity: the ball will register the same amount of force irrespective of the speed of the swing. In Unreal, however, the physics interactions between objects in the VR template are convincing ‘out of the box’. There are several niceties like this that make Unreal more intuitive for VR creators without the use of additional Marketplace items or coding workarounds.
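For a sense of what that kind of workaround has to compute, here is a minimal, engine-agnostic sketch in Python. It is not Unity or Unreal API, and every name in it is hypothetical. The idea is to estimate the racquet's velocity from its tracked positions on consecutive frames, then use the relative velocity between ball and racquet when resolving the hit, so that a faster swing produces a faster ball. It assumes the racquet is far heavier than the ball and uses a simple restitution model.

```python
# Engine-agnostic sketch; all names here are hypothetical, not engine API.
# Assumes the racquet is effectively immovable compared with the ball.

def estimate_velocity(prev_pos, curr_pos, dt):
    """Finite-difference velocity of a tracked object between two frames."""
    return tuple((c - p) / dt for p, c in zip(prev_pos, curr_pos))


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def ball_velocity_after_hit(ball_vel, racquet_vel, normal, restitution=0.8):
    """Return the ball's velocity after bouncing off a moving racquet face.

    normal is a unit vector on the racquet face pointing toward the ball.
    restitution is 1.0 for a perfectly bouncy hit and 0.0 for a dead one.
    """
    # Velocity of the ball as seen from the moving racquet.
    relative = tuple(b - r for b, r in zip(ball_vel, racquet_vel))
    approach = dot(relative, normal)
    if approach >= 0:
        # Ball and racquet are already separating; nothing to resolve.
        return tuple(ball_vel)
    # Reverse the normal component of the relative velocity, scaled by
    # restitution, and leave the tangential component untouched.
    return tuple(b - (1 + restitution) * approach * n
                 for b, n in zip(ball_vel, normal))


if __name__ == "__main__":
    face_normal = (1.0, 0.0, 0.0)
    resting_ball = (0.0, 0.0, 0.0)
    dt = 1 / 90  # one frame at 90 Hz
    slow_swing = estimate_velocity((0.0, 0.0, 0.0), (0.02, 0.0, 0.0), dt)
    fast_swing = estimate_velocity((0.0, 0.0, 0.0), (0.10, 0.0, 0.0), dt)
    print(ball_velocity_after_hit(resting_ball, slow_swing, face_normal))
    print(ball_velocity_after_hit(resting_ball, fast_swing, face_normal))
```

The fast swing sends the ball away noticeably faster than the slow one, which is the behavior the tennis example calls for. In a real project the velocity estimate would usually be smoothed over several frames, since single-frame tracking data tends to be noisy.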

In stark contrast to the sad state of Unity’s XR Editor feature, the Unreal Engine VR Mode feature takes one click to enable, and the user experience with the mode enabled is much more streamlined, too. There are a number of guides for using VR mode and getting started with the controls in the Unreal documentation (https://docs.unrealengine.com/en-US/Engine/Editor/VR/index.html), and overall it feels like a fully thought-out and supported feature of the engine.

While Unity is the more popular engine for XR work, those who get XR jobs or freelance gigs with Unreal seem to get a slightly better hourly rate. So your time learning Unreal may provide financial rewards, even if it is a bit harder to find XR jobs that call for it.

Some examples of XR titles built with Unreal include Moss and Tetris Effect.

Drawbacks for Unreal XR Developers

Unreal really isn’t a mobile-focused engine, and this means that it was slow to jump on the XR bandwagon (the earliest XR experiences were largely mobile-enabled). This lack of focus on the mobile space also means that it is much less oriented towards current AR development, which is still largely for mobile devices. This is likely to continue for a while, since HMD-based AR is not taking off terribly quickly, and even when it does, many AR device manufacturers prioritize support for Unity, releasing SDKs for Unreal later or not at all, which perpetuates the preference for Unity among AR devs.

The fallout is that while Unreal is perfectly capable of building AR, there are very few resources available to help new developers get started. Unreal’s Online Learning Content Library has no AR tutorials, and there are few external resources either. Marketplace selections for AR-related plugins are quite scarce, with just a few highly-rated tools available. All this means that, especially on the AR side of things, you will need more background comfort with Unreal and a greater willingness to dig into documentation to make your app work.

So Which Should You Pick?

This choice used to be easy — the default answer for most XR developers was Unity. When I started working in XR, just 5 years ago, it seemed obvious that I should choose Unity, and it’s still the engine I’m most comfortable with. But Unreal has made up some major ground in XR, so that today, a significant proportion of VR games and experiences are created with Unreal. The decision is now much less straightforward, especially on the VR side of the spectrum.

Still, if you’re serious about both AR and VR, Unity has an edge. Unreal is behind in the AR space, and a number of hardware manufacturers don’t release SDKs as promptly or at all for Unreal, which really hampers your options.

On the other hand, if you are strictly interested in VR, Unreal might be the right way to go — Epic is in a strong position to provide support to developers, and the engine has a lot of upsides in its rendering capabilities, Editor VR Mode, and the ease-of-use its VR templates provide.

So that’s my verdict — if you’re interested in AR, stick with Unity unless you have a compelling reason to do something different. With VR, there’s no wrong decision, so choose based on what other traits move you the most. If you’re just getting started with programming or XR creation, you might find the wealth of tutorials and learning resources Unity has to offer is compelling. Other developers might choose Unreal for the in-Editor VR feature.